Requirements

You are going to need MacPorts. This is quite a useful utility that manages all your terminal-based tools automatically. Go to the MacPorts website and install the *.dmg package. Just make sure you don't already have similar utilities installed, like Homebrew or Fink, because you might run into trouble, such as version mismatches in /usr/bin.

Download and install via MacPorts:

sudo port install git-core

sudo port install libtool

sudo port install libusb +universal

The "+universal" variant builds libusb as a universal binary (32-bit i386 plus 64-bit x86_64), so 32-bit code can link against it too.
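Since Homebrew or Fink can conflict with MacPorts, it may help to check for them before installing. The sketch below is a hypothetical pre-flight helper (not part of MacPorts); it only assumes the standard command names `brew` and `fink`.

```shell
# Hypothetical pre-flight check (not part of MacPorts): print the name of each
# other package manager that is already on your PATH.
find_conflicting_managers() {
  for mgr in brew fink; do
    # command -v succeeds only if the name resolves to a command
    if command -v "$mgr" >/dev/null 2>&1; then
      echo "$mgr"
    fi
  done
}

# Example: warn before running the MacPorts installer.
#   conflicts=$(find_conflicting_managers)
#   [ -n "$conflicts" ] && echo "Found other package managers: $conflicts"
```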

Install OpenKinect

OpenKinect is a great open source project for Kinect owners who want to play, code, create, develop and generally mess around with computer vision research. Create a folder under Documents (or wherever you like) and clone the project from GitHub:

git clone https://github.com/OpenKinect/libfreenect.git

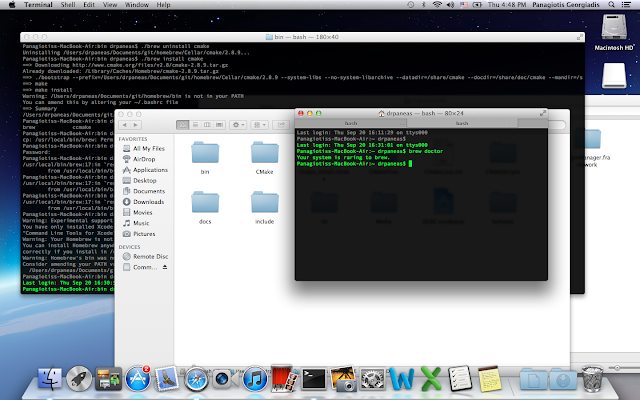

If you don't have CMake installed yet, install it first.

Open the CMake GUI, then configure and generate the project files for Xcode (assuming you already have Xcode installed. You do, don't ya?). Then browse into the build directory and open libfreenect.xcodeproj. You should see the source code project in the Xcode interface. Accept any "must-click-to-proceed" messages and press CMD+B (Build).
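If you'd rather skip the CMake GUI and the Xcode window, the same configure, generate and build steps can be sketched from the terminal. This is a hypothetical helper, not part of libfreenect; it assumes `cmake` is on your PATH, Xcode is installed, and the clone lives in a folder named `libfreenect`.

```shell
# Hypothetical helper (not part of libfreenect): the CMake GUI + Xcode steps
# done from the terminal. Assumes cmake is on PATH and Xcode is installed.
build_libfreenect() {
  src_dir=${1:-libfreenect}
  mkdir -p "$src_dir/build" || return 1
  (
    cd "$src_dir/build" || exit 1
    # Generate libfreenect.xcodeproj (the GUI's Configure + Generate), then
    # build the Debug configuration (what CMD+B does inside Xcode).
    cmake -G Xcode .. && cmake --build . --config Debug
  )
}

# Example, run from the folder that contains your libfreenect clone:
#   build_libfreenect libfreenect
```

Building the Debug configuration mirrors what Xcode does by default when you press CMD+B.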

Now go to the blah/bin/Debug folder and there will be these executables:

cppview glview record regview glpclview hiview regtest tiltdemo

Ok, now plug in your Kinect sensor via USB and have fun with object detection. Also, feel free to make the executables available system-wide through the /usr/bin directory. If so, go to the blah/bin/Debug folder and copy the files to /usr/bin:

sudo cp * /usr/bin/
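`cp *` copies whatever happens to be in the folder. A more selective variant copies only the demo executables listed above. This is a hypothetical helper; the destination defaults to /usr/bin as in the text, and it skips any tool your build did not produce.

```shell
# Hypothetical helper: copy only the demo executables listed above, instead of
# "cp *", into a destination directory (default /usr/bin, as in the text).
install_freenect_tools() {
  src=$1
  dest=${2:-/usr/bin}
  rc=0
  for tool in cppview glview record regview glpclview hiview regtest tiltdemo; do
    if [ -x "$src/$tool" ]; then
      # install(1) copies the file and sets rwxr-xr-x permissions
      install -m 0755 "$src/$tool" "$dest/$tool" || rc=1
    else
      echo "skipping $tool: not found in $src" >&2
    fi
  done
  return "$rc"
}
```

Writing to /usr/bin needs root, so save the function to a file, open a root shell with `sudo -s`, source the file there and run `install_freenect_tools blah/bin/Debug`.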

Now run

glview

Ok, now let's have some fun!

Install OpenNI

What's this, you may ask? OpenNI is an open source framework that provides an application programming interface (API) for writing applications that use natural interaction. The API covers communication both with low-level devices (e.g. vision and audio sensors) and with high-level middleware solutions (e.g. visual tracking using computer vision).

That said, download the latest unstable build for Mac from the OpenNI download page:

Select OpenNI Binaries in the first drop down menu

Select "Unstable" in the second drop down menu

OpenNI Unstable Build for Mac OS X 10.7 (latest version)

Click on "Download"

Once the download finishes, extract the file into the base OpenKinect folder (or wherever you want) and run the install script:

sudo ./install.sh

Normally, you should see:

Installing OpenNI

****************************

copying shared libraries...OK

copying executables...OK

copying include files...OK

creating database directory...OK

registering module 'libnimMockNodes.dylib'...OK

registering module 'libnimCodecs.dylib'...OK

registering module 'libnimRecorder.dylib'...OK

creating java bindings directory...OK

Installing java bindings...OK

*** DONE ***

Okie dokie
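If you save the installer output to a file, a quick check can confirm that it finished. The helper below is a hypothetical sketch: it relies only on the `*** DONE ***` marker and the "registering module" lines visible in the installer logs in this guide, and uses the standard `tee` command to capture the output.

```shell
# Hypothetical helper: check a saved installer log. Prints the
# "registering module" lines and succeeds only if the log contains the
# "*** DONE ***" marker that ends each installer log in this guide.
check_install_log() {
  log_file=$1
  if [ ! -r "$log_file" ]; then
    echo "cannot read $log_file" >&2
    return 2
  fi
  # Case-insensitive, so NITE's "Registering module" lines match too
  grep -i 'registering module' "$log_file"
  grep -qF '*** DONE ***' "$log_file"
}

# Example:
#   sudo ./install.sh | tee install.log
#   check_install_log install.log && echo "install looks complete"
```

The check ignores lines such as `ls: ... No such file or directory` in the NITE output; in that log they appear for feature versions without a dylib, while the 1_5_2 modules still register OK.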

Install SensorKinect

Download the latest unstable version of SensorKinect from https://github.com/avin2/SensorKinect and extract it into your Kinect folder.

Go to the "bin" folder.

Extract the file "SensorKinect093-Bin-MacOSX-v5.1.2.1.tar.bz2" in the same bin folder.
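If you prefer to extract from the terminal, the extracted folder name does not have to be guessed: the archive listing tells you where install.sh ends up. This is a hypothetical helper; it assumes the tarball contains exactly one install.sh, and it relies on tar detecting the bzip2/gzip compression by itself when reading a file. The same pattern works for the NITE tarball later on.

```shell
# Hypothetical helper: extract an installer tarball and print the path of the
# install.sh it contains, so you don't have to guess the extracted folder name.
extract_installer() {
  archive=$1
  dest=${2:-$(dirname "$archive")}
  # tar detects gzip/bzip2 compression on its own when reading a file
  tar -xf "$archive" -C "$dest" || return 1
  rel=$(tar -tf "$archive" | grep -E '(^|/)install\.sh$' | head -n 1)
  if [ -z "$rel" ]; then
    echo "no install.sh found in $archive" >&2
    return 1
  fi
  printf '%s/%s\n' "$dest" "${rel#./}"
}

# Example, from the bin folder:
#   installer=$(extract_installer SensorKinect093-Bin-MacOSX-v5.1.2.1.tar.bz2)
#   cd "$(dirname "$installer")" && sudo ./install.sh
```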

In Terminal, go to the bin folder and run:

sudo ./install.sh

You should see:

Installing PrimeSense Sensor

****************************

creating config dir /usr/etc/primesense...OK

copying shared libraries...OK

copying executables...OK

registering module 'libXnDeviceSensorV2KM.dylib' with OpenNI...OK

registering module 'libXnDeviceFile.dylib' with OpenNI...OK

copying server config file...OK

setting uid of server...OK

creating server logs dir...OK

*** DONE ***

Install PrimeSense NITE

Download the latest version of PrimeSense NITE from the OpenNI download page:

Select OpenNI Compliant Middleware Binaries in the first drop down menu

Select "Unstable" in the second drop down menu

PrimeSense NITE Unstable Build for Mac OS X 10.7 (latest version)

Click on "Download".

Extract the tarball and run the installer script.

sudo ./install.sh

Normally you will get:

Installing NITE

***************

Copying shared libraries... OK

Copying includes... OK

Installing java bindings... OK

Installing module 'Features_1_3_0'...

ls: Features_1_3_0/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_3_1'...

ls: Features_1_3_1/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_4_1'...

ls: Features_1_4_1/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_4_2'...

ls: Features_1_4_2/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_5_2'...

Registering module 'libXnVFeatures_1_5_2.dylib'... OK

Copying XnVSceneServer... OK

Installing module 'Features_1_5_2'

ls: Hands_1_3_0/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_5_2'

ls: Hands_1_3_1/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_5_2'

ls: Hands_1_4_1/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_5_2'

ls: Hands_1_4_2/Bin/lib*dylib: No such file or directory

Installing module 'Features_1_5_2'

registering module 'libXnVHandGenerator_1_5_2.dylib'...OK

Adding license.. OK

*** DONE ***

Run some examples

Copy all the XML files from the NITE Data folder to the SensorKinect Data folder. I prefer the terminal way; you may use drag and drop in the Finder instead.

cp Data/*.xml ../SensorKinect/Data/

Now browse to the Samples directory (/NITE/Samples/Bin/x64-Release) and launch the samples. For example, let's run Sample-SceneAnalysis.

Somehow (magically), the Kinect sensor detected me (my body) and drew it in blue, leaving the background in grey.

Let's try another one: the PointViewer! Clap your hands or make a wave gesture, and hand tracking will begin.

OSCeleton

OSCeleton is a proxy that sends skeleton information collected from the kinect sensor via OSC, making it easier to use input from the device in any language / framework that supports the OSC protocol.

So, clone the project hosted on GitHub, but make sure you are in the OpenNI directory.

cd OpenNI

git clone https://github.com/Sensebloom/OSCeleton.git

Now enter the project directory and compile it:

cd OSCeleton

make -j4
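Rather than hard-coding the job count in `make -j4`, you can derive it from the machine. The hypothetical helper below (not part of OSCeleton's build) reads the logical CPU count with `sysctl -n hw.ncpu` on macOS, which on a Hyper-Threading machine includes the virtual threads, falls back to `getconf _NPROCESSORS_ONLN` elsewhere, and uses 1 if neither gives a number.

```shell
# Hypothetical helper: choose a make job count from the number of logical
# CPUs (Hyper-Threading threads included), falling back to 1.
cpu_jobs() {
  n=$(sysctl -n hw.ncpu 2>/dev/null)  # macOS
  case $n in
    ''|*[!0-9]*|0) n=$(getconf _NPROCESSORS_ONLN 2>/dev/null) ;;  # other systems
  esac
  case $n in
    ''|*[!0-9]*|0) n=1 ;;
  esac
  echo "$n"
}

# Example:
#   make -j"$(cpu_jobs)"
```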

I use -j4 to compile on all four of my cores in parallel, which is way faster ;) If you have Hyper-Threading (HT) enabled, try -j8 (four physical cores plus four virtual threads).

If everything worked, you can now test OSCeleton. In the OSCeleton folder ("Sensebloom-7307683-OSCeleton" in my case), double-click the executable "OSCeleton" or run it from Terminal. Stand about two steps away from the Kinect and hold the calibration pose, with both arms raised.

Thanks to Alexandra for letting me mess around in her room :PPP

You should see this in Terminal:

Initializing...

Configured to send OSC messages to 127.0.0.1:7110

Multipliers (x, y, z): 1.000000, 1.000000, 1.000000

Offsets (x, y, z): 0.000000, 0.000000, 0.000000

OSC Message format: Default OSCeleton format

Initialized Kinect, looking for users...

New User 1

Calibration started for user 1

Calibration complete, start tracking user 1

Install Processing and libraries

Download Processing from the Processing website, then drag and drop the application into the /Applications folder to install it. The Mac way ;)

Download examples

Go to GitHub and clone the OSCeleton examples:

git clone https://github.com/Sensebloom/OSCeleton-examples.git

Then change into the MotionCapture3D sketch folder:

cd OSCeleton-examples/processing/MotionCapture3D/

and create a libraries folder:

mkdir libraries

Inside that folder, extract the two libraries needed:

oscP5

pbox2d

Ok, now run OSCeleton again to calibrate yourself (again) and, at the same time, open MotionCapture3D.pde in Processing. Click Play and voila!

Thanks to

for the tutorial and ideas.